background agents are a larp

if you've been trying to manage 20 claude code sessions in tmux, or using cursor's background agents — i have bad news for you: you're probably doing yourself more harm than good.

there are a few names that people are giving to this idea right now:

- background agents

- ambient agents

- async agents

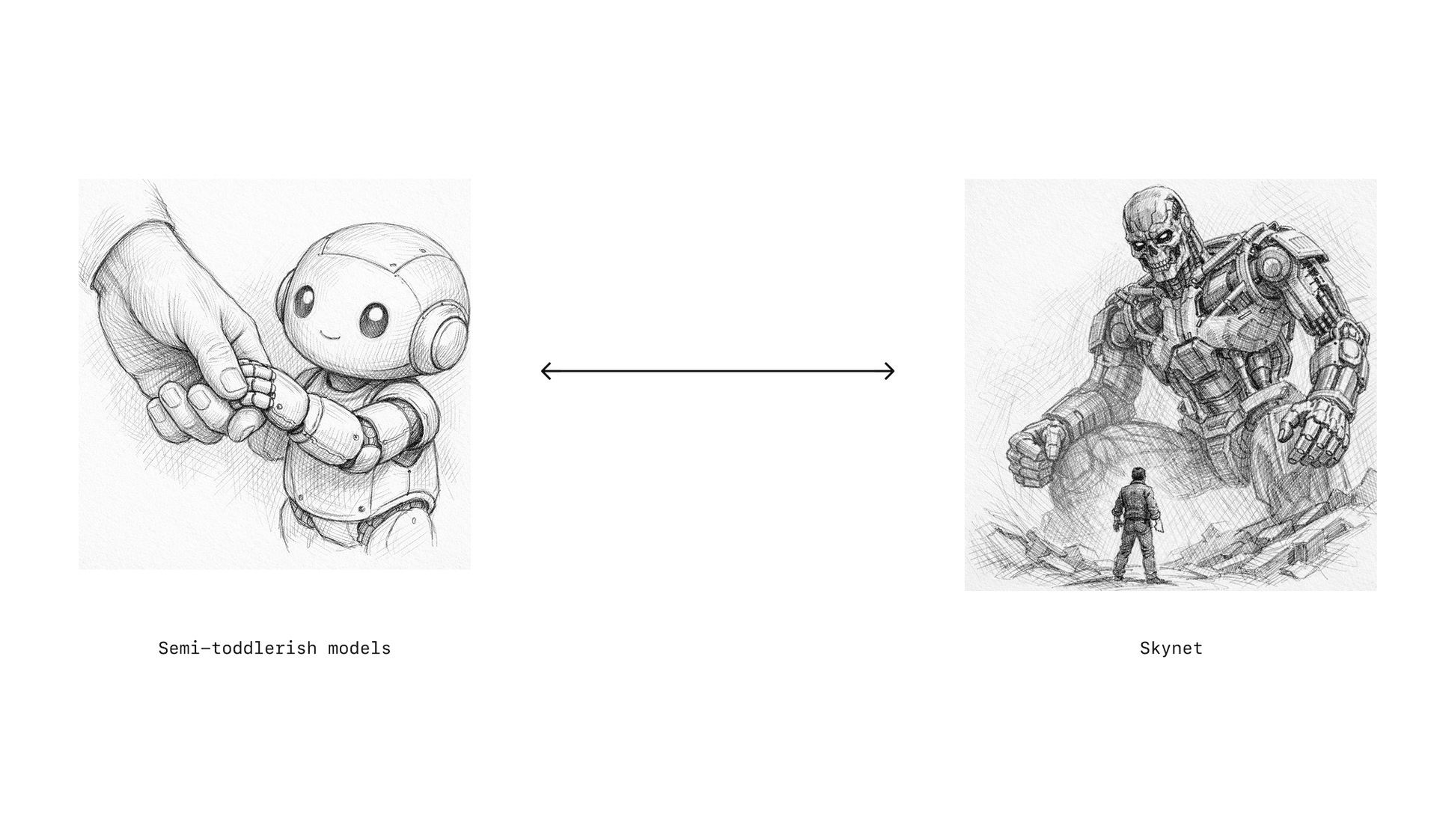

i like to think of them as landing somewhere on Karpathy's autonomy slider:

- partial autonomy: human observes partial outputs → intervenes early → constrains trajectory

- fuller autonomy: agent commits to a trajectory → human evaluates only after substantial work exists (work continues without waiting for feedback or results)

thinking in terms of degree of autonomy is clearer than the label "background agents", which tends to just mean "it runs in the cloud". for the purposes of this discussion, only the degree of autonomy matters.

the core distinction isn't where the agent runs but rather the feedback topology. in other words, how tight is the feedback loop on decision making?

moving right on the slider (toward fuller autonomy) means batching more decisions before a human corrects course. that increases both the delay and the amount of output you have to verify and unwind if the early assumptions are wrong.

why delayed feedback compounds errors

today, agents make non-trivial mistakes with meaningful probability. early assumptions are often wrong or underspecified, and downstream code depends on them.

in this world, background work is actively harmful. why?

when feedback is delayed:

- the agent continues generating code conditioned on its own incorrect assumptions.

- wrong decisions accrete dependent structure: APIs, data models, etc.

- by the time a human looks, the result is merely delayed confusion.

agents are highly sensitive to initial specifications, so small early ambiguities are amplified over long sequences of actions, making outcomes effectively unpredictable and late correction disproportionately expensive.
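to make the compounding concrete, here's a minimal sketch. it's a toy simulation, not a measurement: it assumes each step goes wrong independently with a fixed probability, that every step taken after an uncaught mistake is wasted, and that a review always catches the mistake. the names `wasted_steps` and `average_waste` and all the numbers are made up for illustration.

```python
import random


def wasted_steps(eps, total_steps, review_every, rng):
    """Count steps spent building on an uncorrected mistake in one run."""
    wasted = 0
    off_track = False
    for step in range(1, total_steps + 1):
        if not off_track and rng.random() < eps:
            off_track = True  # a wrong decision; later steps now depend on it
        if off_track:
            wasted += 1
        if step % review_every == 0:
            off_track = False  # assumption: the review catches and fixes it
    return wasted


def average_waste(eps, total_steps, review_every, trials=2000, seed=0):
    """Average wasted steps per run over many simulated runs."""
    rng = random.Random(seed)  # fixed seed so the comparison is repeatable
    total = sum(
        wasted_steps(eps, total_steps, review_every, rng) for _ in range(trials)
    )
    return total / trials


if __name__ == "__main__":
    # same task, same error rate; only the review interval changes
    for interval in (1, 10, 100):
        waste = average_waste(eps=0.02, total_steps=100, review_every=interval)
        print(f"review every {interval:>3} steps: ~{waste:.1f} wasted steps")
```

under these assumptions the only thing that changes between runs is how long a mistake survives before someone looks, and the wasted work grows quickly as the review interval stretches.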

but you might think: “If the agent goes down a wrong path, I’ll just reject the PR and rerun it.”

that only works if rejection is stateless and obvious. but in order to confidently reject, the human must figure out:

- Is this wrong, or just unfamiliar?

- Is it wrong at the root, or salvageable?

- Is it misaligned with the spec, or did the spec change?

and in order to do that, the human must:

- reload context into memory, recall constraints + initial intent / request (remember we are now at a later point in time)

- reconstruct the agent’s intent

- diagnose why the approach is wrong (vs merely unfamiliar)

- decide it’s irrecoverable rather than salvageable

it's a mistake to think that you can manage 10-20 agents in realtime and multitask across them effectively. the research on multitasking backs this up: comparative studies consistently find that multitasking increases error rates and memory lapses for cognitive work. people get slower, make more mistakes, and lose context compared to doing the same work sequentially. so while you might feel like a god-tier programming wizard managing 15 tmux sessions, Gandalf is unlikely to be impressed.

a simple cost model

call this diagnosis cost. formally, if:

- $p$ is the probability of a bad trajectory.

- if you want to connect this to per-step error: for a task requiring $n$ meaningful decisions and per-step error rate $\epsilon$, a simple model (treating per-step errors as independent) gives:

$$p = 1 - (1 - \epsilon)^n$$

so longer autonomous runs raise the chance of going off-trajectory even when $\epsilon$ is low.

- $d$ is the delay before human feedback,

- $k$ is the amount of accumulated output / number of decisions the human has to verify,

- $C(d, k)$ is the human cost of diagnosing and rejecting after delay $d$ with review load $k$,

then the expected human cost from wrong paths is:

$$\mathbb{E}[\text{cost}] = p \cdot C(d, k)$$

with $C(d, k)$ non-decreasing in both arguments. in particular, as $k$ grows (batch size / review load), decision fatigue makes review slower + more error-prone.

background agents increase $C(d, k)$ by raising both $d$ and $k$, while tight feedback loops minimize it because they prune bad branches early. in a tight loop, the human remembers what they just asked for because the mental state is warm, so rejection is fast + local, and the cognitive overhead of context switching is limited.

in other words, background agents only become useful when they can complete an end-to-end trajectory with a low enough probability of “trajectory-level” failure that the expected cost of delayed correction is small.
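here's a rough sketch of that cost model in code. the shape of `diagnosis_cost` (linear in delay and review load) and every constant in it are assumptions picked for illustration; the argument only needs the cost to be non-decreasing in both. the per-step error model also assumes decisions fail independently.

```python
def p_bad_trajectory(eps: float, n_decisions: int) -> float:
    """Chance that at least one of n independent decisions goes wrong."""
    return 1.0 - (1.0 - eps) ** n_decisions


def diagnosis_cost(delay_hours: float, review_load: int) -> float:
    """Hypothetical human cost (minutes) of diagnosing and rejecting a bad run."""
    context_reload = 5.0 * delay_hours  # assumed: colder context costs more
    reading = 0.5 * review_load         # assumed: each decision takes time to verify
    return 1.0 + context_reload + reading


def expected_cost(eps: float, n_decisions: int, delay_hours: float) -> float:
    """Expected human cost from wrong paths for one review of n decisions."""
    # the human has to verify everything produced since the last check-in
    return p_bad_trajectory(eps, n_decisions) * diagnosis_cost(delay_hours, n_decisions)


# one 50-decision task, done two ways (all numbers are illustrative)
EPS = 0.02

# tight loop: 10 check-ins of 5 decisions each, reviewed within minutes
tight_total = 10 * expected_cost(EPS, n_decisions=5, delay_hours=0.05)

# background run: all 50 decisions reviewed once, hours later
background_total = expected_cost(EPS, n_decisions=50, delay_hours=4.0)

if __name__ == "__main__":
    print(f"tight loop:     {tight_total:.2f} expected minutes lost")
    print(f"background run: {background_total:.2f} expected minutes lost")
```

the tight loop pays for ten reviews instead of one, but each review has a low chance of finding a bad trajectory and is cheap when it does. the background run pays once, with a much higher failure probability multiplied by a much larger diagnosis cost.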

until models cross the reliability threshold, the winning workflow is boring:

- keep the loop tight for anything with unclear requirements or high leverage decisions.

- steer aggressively, constantly re-referencing the specs + agreed-upon plans.

- invest in automation that makes mistakes cheap to detect: tests, linters, type checks, evals.

the practical rule is simple: until agents are end-to-end reliable, keep autonomy proportional to how cheap it is to detect + undo mistakes.
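one way to turn that rule into a number, as a sketch under simplifying assumptions: hold the cost of a failed run constant (in practice it grows with delay and review load), assume per-step errors are independent, and ask how many decisions an agent can batch before the expected loss exceeds a budget you pick. `max_autonomous_decisions`, `cost_per_failure`, and `budget` are hypothetical names, not anything standard.

```python
import math


def max_autonomous_decisions(eps: float, cost_per_failure: float, budget: float) -> float:
    """Largest n such that (1 - (1 - eps)**n) * cost_per_failure <= budget."""
    if not 0.0 < eps < 1.0:
        raise ValueError("eps must be strictly between 0 and 1")
    if cost_per_failure <= 0 or budget < 0:
        raise ValueError("cost_per_failure must be positive and budget non-negative")
    if budget >= cost_per_failure:
        return math.inf  # even a guaranteed failure fits within the budget
    tolerated_p = budget / cost_per_failure
    # solve 1 - (1 - eps)**n <= tolerated_p for n and round down
    return math.floor(math.log(1.0 - tolerated_p) / math.log(1.0 - eps))


if __name__ == "__main__":
    # expensive to detect + undo (say, a schema change with no tests)
    print(max_autonomous_decisions(eps=0.02, cost_per_failure=10.0, budget=1.0))
    # cheap to detect + undo (good tests, easy revert)
    print(max_autonomous_decisions(eps=0.02, cost_per_failure=2.0, budget=1.0))
```

with the same error rate and budget, making failures five times cheaper to catch and undo lets the agent run several times longer between check-ins. that's the sense in which tests, linters, and type checks buy autonomy.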

stay tuned

of course, this will all change very soon. models are being unhobbled at a shocking rate, and we'll have new problems to consider when working in this new mode of autonomy.

more on that to come..